The language model landscape is constantly evolving, and Google’s GLaM represents a significant step toward reducing the computational cost of training at a scale previously reserved for large dense models. But is GLaM truly a noteworthy solution to the field's compute and energy challenges?

With 1.2 trillion parameters, GLaM is roughly seven times the size of GPT-3 (175 billion parameters), yet it requires less energy to train. However, the question arises whether this size reflects real progress in model performance. Even though only a small fraction of its parameters is activated for any given token, training GLaM still consumes a significant amount of energy.

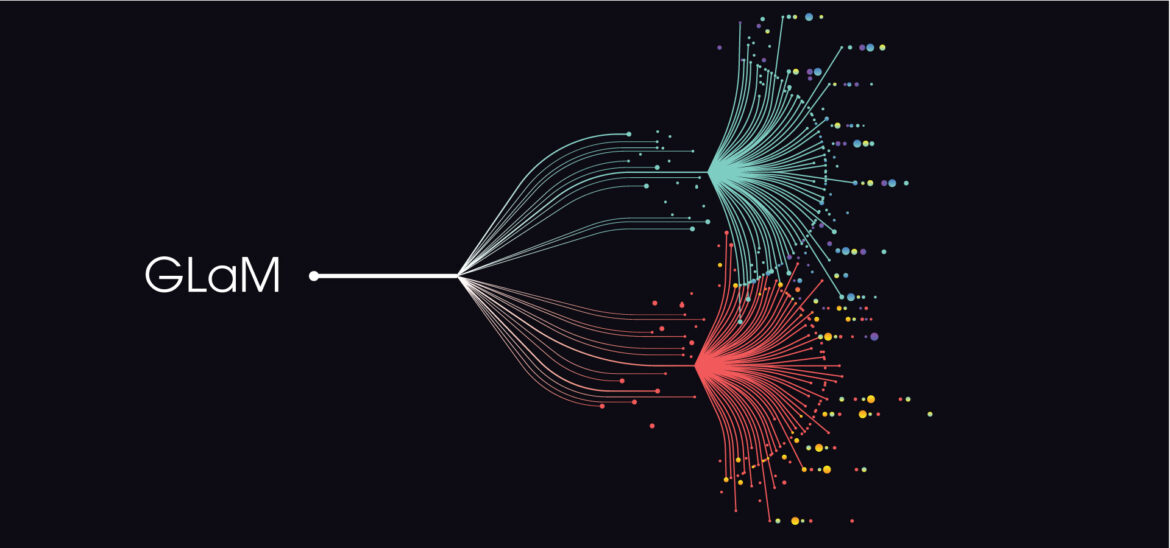

Another notable aspect of GLaM is its sparsely activated mixture-of-experts architecture, in which only a small subset of experts is activated for each input token. This reduces computational cost, but it also raises the question of how well the routing selects the right experts for each language task.
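To make the idea of sparse activation concrete, here is a minimal sketch of top-k expert routing in plain Python. It is not GLaM's implementation: the function names, the toy "experts" (simple scaling functions), the 8 experts, and `k=2` are all illustrative assumptions, chosen only to show how a gate can score every expert while running just a few of them per token.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_logits, k=2):
    """Pick the k highest-scoring experts and renormalize their weights.

    Returns a list of (expert_index, weight) pairs whose weights sum to 1.
    """
    probs = softmax(gate_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def make_expert(scale):
    """Hypothetical toy expert: multiplies every feature by a constant."""
    return lambda token: [scale * x for x in token]


def moe_layer(token, gate_weights, experts, k=2):
    """Run one token through a sparsely activated mixture of experts.

    Every expert gets a gate score, but only the k selected experts
    are actually evaluated; the rest cost nothing for this token.
    """
    logits = [sum(w * x for w, x in zip(row, token)) for row in gate_weights]
    routes = top_k_route(logits, k)
    output = [0.0] * len(token)
    for index, weight in routes:
        expert_out = experts[index](token)
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return output, [index for index, _ in routes]


if __name__ == "__main__":
    random.seed(0)
    num_experts, dim = 8, 4  # illustrative sizes, not GLaM's configuration
    gate_weights = [[random.gauss(0, 1) for _ in range(dim)]
                    for _ in range(num_experts)]
    experts = [make_expert(i + 1) for i in range(num_experts)]
    token = [0.5, -1.0, 0.25, 2.0]
    output, chosen = moe_layer(token, gate_weights, experts, k=2)
    print(f"experts used: {chosen} ({len(chosen)} of {num_experts})")
```

In this sketch the compute per token grows with `k`, not with the total number of experts, which is where the savings come from. It also shows why routing quality matters: if the gate scores are poor, the token is handled by the wrong experts and the unused capacity does not help.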

While GLaM promises to reduce computational cost and energy use, carefully evaluating its actual performance against other models remains a challenge. More research is needed to assess both the performance and the environmental sustainability of GLaM relative to dense baseline models such as GPT-3.

Going forward, language model development needs to balance improving performance against minimizing environmental impact, with the aim of creating reliable and sustainable solutions for the research community and end users.

Author: Hồ Đức Duy. © Reproductions must always retain copyright attribution.